🧠 Caching in .NET the Right Way — Layers, Patterns & Real-World Code

Discover how to design efficient caching strategies to boost performance and scalability in your .NET apps.

Caching correctly can make your .NET app dramatically faster and far more scalable, but done poorly, it creates bugs, stale data, and operational headaches. This article walks you through practical caching layers, patterns, code samples, and operational advice so you can design a robust caching strategy for real-world .NET systems.

⚡ TL;DR

Use a layered approach:

➡ In-process cache for ultra-fast reads

➡ Distributed cache (Redis) for shared scale

➡ Versioning + invalidation + observability for correctness and reliability

1. Why Multi-layer Caching?

Caching isn’t one-size-fits-all. Different layers serve different needs.

🕐 Latency: In-process caches (IMemoryCache) return data in microseconds.

🌍 Scale & consistency: Distributed caches (Redis) let multiple app instances share state.

💰 Cost: Cache hot paths, not everything — protect the DB from expensive reads.

⚙️ Flexibility: Different access patterns require different cache semantics.

2. Cache Layers: Roles & Choices

a) In-process (local) cache

Use for per-instance hot items (computed values, config).

.NET: IMemoryCache (Microsoft.Extensions.Caching.Memory). Fast, but not shared across instances.
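A minimal IMemoryCache sketch, assuming the Microsoft.Extensions.Caching.Memory NuGet package (already part of ASP.NET Core apps). LocalConfigCache, its key format and the five-minute expiry are illustrative choices, not a prescribed setup. Note that GetOrCreate does not stop two concurrent callers from both running the factory for the same key.

```csharp
using System;
using Microsoft.Extensions.Caching.Memory; // NuGet: Microsoft.Extensions.Caching.Memory

// Hypothetical per-instance cache for computed settings. Each app instance keeps
// its own copy, so values can briefly differ between instances.
public static class LocalConfigCache
{
    // SizeLimit is optional; once it is set, every entry must declare a Size
    // (the units are up to you; here each entry counts as 1).
    private static readonly MemoryCache Cache = new(new MemoryCacheOptions { SizeLimit = 1_000 });

    public static int LoadCount { get; private set; }

    public static string GetSetting(string name) =>
        Cache.GetOrCreate($"config:{name}", entry =>
        {
            entry.AbsoluteExpirationRelativeToNow = TimeSpan.FromMinutes(5);
            entry.Size = 1;
            LoadCount++; // stands in for an expensive computation or lookup
            return $"value-of-{name}";
        })!;
}
```

The second call for the same name is served from memory, so the factory (and LoadCount) runs only once until the entry expires.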

b) Distributed cache

Use for shared data between instances (user sessions, product catalogs).

Options: Redis (common), Memcached (less common for modern .NET), Azure Cache for Redis.

.NET: the IDistributedCache abstraction, or a direct client (StackExchange.Redis) for advanced scenarios.
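A cache-aside sketch over the IDistributedCache abstraction, storing values as JSON with System.Text.Json. The DistributedCacheAside helper is hypothetical; GetStringAsync and SetStringAsync are the abstraction's string extension methods. In production the registered implementation would typically be Redis (for example via AddStackExchangeRedisCache); for local runs, MemoryDistributedCache from Microsoft.Extensions.Caching.Memory can stand in.

```csharp
using System;
using System.Text.Json;
using System.Threading.Tasks;
using Microsoft.Extensions.Caching.Distributed; // NuGet: Microsoft.Extensions.Caching.Abstractions

// Hypothetical helper: cache-aside against any IDistributedCache (Redis in production).
public static class DistributedCacheAside
{
    public static async Task<T?> GetOrLoadAsync<T>(
        IDistributedCache cache, string key, Func<Task<T>> load, TimeSpan ttl)
    {
        // 1. Try the shared cache first.
        string? cached = await cache.GetStringAsync(key);
        if (cached is not null)
            return JsonSerializer.Deserialize<T>(cached);

        // 2. On a miss, load from the source of truth...
        T value = await load();

        // 3. ...and store the serialized value with an absolute TTL.
        await cache.SetStringAsync(key, JsonSerializer.Serialize(value),
            new DistributedCacheEntryOptions { AbsoluteExpirationRelativeToNow = ttl });
        return value;
    }
}
```

Because every instance reads the same store, a value loaded by one instance is a hit for all the others.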

c) CDN / edge caches

For static assets and HTTP responses (images, HTML fragments).

Useful for public-facing read-heavy endpoints.

d) Application-level output/response caching

Use Response Caching or Output Caching in the app, or a reverse proxy such as Varnish in front of it.

In .NET: the Response Caching middleware or ASP.NET Core Output Caching (.NET 7+).

3. Caching Patterns in Action

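Let’s use HybridCache (NuGet package Microsoft.Extensions.Caching.Hybrid, usable on .NET 8 and later) to illustrate core caching patterns. It puts an in-process cache in front of an optional distributed cache behind one API: register it with builder.Services.AddHybridCache(), and if an IDistributedCache such as Redis is also registered, HybridCache uses it as the second layer.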

Here’s a simple setup:

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

public class Product
{
    public int Id { get; set; }
    public string Name { get; set; } = string.Empty;
    public decimal Price { get; set; }
}

// In-memory stand-in for a real database. ConcurrentDictionary is used because
// the write-back example updates it from a background task.
public class ProductRepository
{
    private readonly ConcurrentDictionary<int, Product> _db = new();

    public ProductRepository()
    {
        _db[1] = new Product { Id = 1, Name = "Laptop", Price = 1200 };
        _db[2] = new Product { Id = 2, Name = "Phone", Price = 800 };
    }

    public Task<Product?> GetByIdAsync(int id)
    {
        _db.TryGetValue(id, out var product);
        Console.WriteLine($"[DB] Get Product {id}");
        return Task.FromResult(product);
    }

    public Task UpdateAsync(Product product)
    {
        _db[product.Id] = product;
        Console.WriteLine($"[DB] Updated Product {product.Id}");
        return Task.CompletedTask;
    }
}
```

Cache Service

public class HybridCacheService

{

private readonly HybridCache cache;

private readonly ProductRepository repository;

public HybridCacheService(HybridCache cache, ProductRepository repository)

{

this.cache = cache;

this.repository = repository;

}

#region 1. Cache-Aside (Lazy Loading)

public async Task<Product?> GetProductCacheAsideAsync(int id)

{

string cacheKey = $”Product_{id}”;

return await cache.GetOrCreateAsync(

cacheKey,

async token => await repository.GetByIdAsync(id),

new HybridCacheEntryOptions { Expiration = TimeSpan.FromMinutes(10) });

}

#endregion

#region 2. Read-Through

public async Task<Product?> GetProductReadThroughAsync(int id)

{

string cacheKey = $”Product_{id}”;

return await cache.GetOrCreateAsync(

cacheKey,

async token => await repository.GetByIdAsync(id),

new HybridCacheEntryOptions { Expiration = TimeSpan.FromMinutes(10) });

}

#endregion

#region 3. Write-Around

public async Task UpdateProductWriteAroundAsync(Product product)

{

// Write directly to DB only

await repository.UpdateAsync(product);

// Cache not updated

Console.WriteLine(”[Cache-Write-Around] Skipped cache update”);

}

#endregion

#region 4. Write-Back (Write-Behind)

public async Task UpdateProductWriteBackAsync(Product product)

{

string cacheKey = $”Product_{product.Id}”;

// Update cache first

await cache.SetAsync(cacheKey, product, new HybridCacheEntryOptions

{

Expiration = TimeSpan.FromMinutes(10)

});

Console.WriteLine(”[Cache-Write-Back] Updated cache, DB async”);

// Simulate background DB update

_ = Task.Run(async () => await repository.UpdateAsync(product));

}

#endregion

#region 5. Write-Through

public async Task UpdateProductWriteThroughAsync(Product product)

{

string cacheKey = $”Product_{product.Id}”;

// Update DB first

await repository.UpdateAsync(product);

// Update cache immediately

await cache.SetAsync(cacheKey, product, new HybridCacheEntryOptions

{

Expiration = TimeSpan.FromMinutes(10)

});

Console.WriteLine(”[Cache-Write-Through] Updated cache & DB”);

}

#endregion

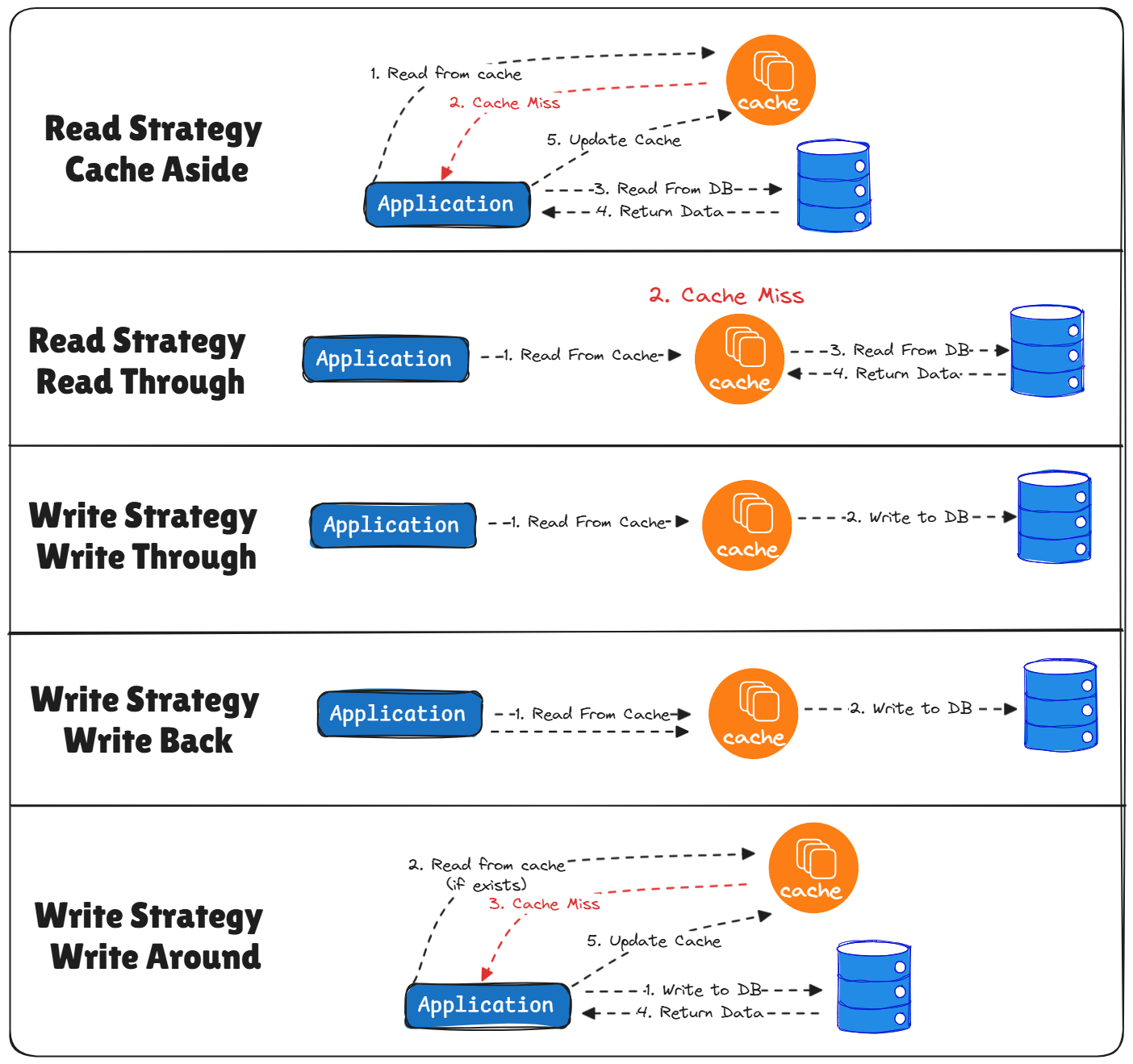

}4. Caching patterns (when to use which)

Cache-Aside (lazy loading)

App checks cache.

On miss, load from DB, store in cache, then return.

Pros: simple, explicit control.

Cons: potential stampede on high-concurrency misses.

Read-Through / Write-Through

The cache client (or a library) automatically loads from, or writes through to, the backing store.

Better for centralizing caching logic; often used in distributed caches with middleware.

Write-Behind (async write-back)

Writes first to cache, then asynchronously to DB.

Use cautiously: risk of data loss on crashes.

Write-Around Caching

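Writes go straight to the DB and bypass the cache; entries are loaded only on a later read miss. Evict any existing cached copy on write, or it stays stale until it expires.

Use it to avoid polluting the cache with data that may not be read soon after writing.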
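The HybridCacheService example above simulates write-behind with a fire-and-forget Task.Run. A slightly more orderly variant can be sketched with System.Threading.Channels (built into .NET Core 3.0 and later): the cache is updated immediately, while DB writes go through a bounded in-memory queue drained in order by one background consumer. WriteBehindQueue<T> is a hypothetical helper, and because the queue lives in memory, a crash still loses anything not yet persisted.

```csharp
using System;
using System.Threading.Channels;
using System.Threading.Tasks;

// Hypothetical write-behind helper: callers update the cache themselves, then
// enqueue the DB write here; a single background consumer persists items in order.
public sealed class WriteBehindQueue<T>
{
    private readonly Channel<T> _channel = Channel.CreateBounded<T>(
        new BoundedChannelOptions(1_000) { FullMode = BoundedChannelFullMode.Wait, SingleReader = true });
    private readonly Task _worker;

    public WriteBehindQueue(Func<T, Task> persist)
    {
        _worker = Task.Run(async () =>
        {
            await foreach (T item in _channel.Reader.ReadAllAsync())
            {
                try
                {
                    await persist(item);
                }
                catch (Exception ex)
                {
                    // A real system would retry or dead-letter instead of only logging.
                    Console.WriteLine($"[Write-Behind] DB write failed: {ex.Message}");
                }
            }
        });
    }

    // When the queue is full, this waits asynchronously (back-pressure on writers).
    public ValueTask EnqueueAsync(T item) => _channel.Writer.WriteAsync(item);

    // Stops accepting writes and waits until everything already queued is persisted.
    public async Task DrainAsync()
    {
        _channel.Writer.Complete();
        await _worker;
    }
}
```

In UpdateProductWriteBackAsync, the Task.Run line could become an EnqueueAsync(product) call on a queue constructed with repository.UpdateAsync as the persist delegate, and DrainAsync would run on application shutdown.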

4. Consistency & Invalidation Strategies

Consistency is often harder than caching itself. Choose your invalidation model wisely:

⏰ Time-based TTL: Simple expiration; best for mostly-read or eventually consistent data.

🔄 Event-driven invalidation: When data changes, publish an event through Redis Pub/Sub or a message bus so every instance evicts its stale copy.

🧩 Versioned keys: Add a version suffix, e.g. product:123:v42. Bumping the version on change makes old entries unreachable, and they age out through their TTL.

🎯 Partial invalidation: Invalidate specific keys instead of flushing the whole cache.
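A minimal sketch of versioned keys, assuming one version counter per entity id. VersionedKeyBuilder is hypothetical and keeps its counters in process memory; with several app instances, the counters would need to live in shared storage (for example, a Redis counter) so every instance builds the same key.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical helper that builds keys like "product:123:v42".
// Bumping the version makes every older key unreachable; the orphaned
// entries are never read again and simply expire via their TTL.
public sealed class VersionedKeyBuilder
{
    // Assumption: in-process counters, for illustration only.
    private readonly ConcurrentDictionary<string, long> _versions = new();

    public string Build(string entity, int id)
    {
        string baseKey = $"{entity}:{id}";
        long version = _versions.GetOrAdd(baseKey, 1); // first version is v1
        return $"{baseKey}:v{version}";
    }

    // Call after the underlying data changes.
    public void Invalidate(string entity, int id) =>
        _versions.AddOrUpdate($"{entity}:{id}", 2, (_, current) => current + 1);
}
```

Invalidation never touches the cache itself: it only changes which key readers look up, which is why it works the same for local and distributed layers.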

5. Preventing Cache Stampede (a.k.a. Thundering Herd)

When multiple requests miss the cache simultaneously:

🔒 Use distributed locks (e.g., the Redis Redlock algorithm).

🚦 Apply the Singleflight pattern to deduplicate concurrent requests.

🔁 Implement in-flight caching — reuse in-progress tasks for the same key.
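The single-flight / in-flight idea can be sketched with a ConcurrentDictionary of Lazy<Task<T>>: the first caller for a key starts the load, and later callers await the same task. SingleFlight<T> is a hypothetical helper (the name borrows from Go's singleflight package); it deduplicates within one process only, so protection across instances would still need a distributed lock. HybridCache's GetOrCreateAsync already gives its own factories this kind of protection.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading.Tasks;

// Concurrent callers asking for the same key share one in-flight Task
// instead of each hitting the database.
public sealed class SingleFlight<T>
{
    private readonly ConcurrentDictionary<string, Lazy<Task<T>>> _inFlight = new();

    public async Task<T> RunAsync(string key, Func<Task<T>> factory)
    {
        // Lazy guarantees the factory starts at most once, even if GetOrAdd races.
        var lazy = _inFlight.GetOrAdd(key, _ => new Lazy<Task<T>>(factory));
        try
        {
            return await lazy.Value;
        }
        finally
        {
            // Remove only this exact entry, so a newer flight for the key is untouched.
            _inFlight.TryRemove(new KeyValuePair<string, Lazy<Task<T>>>(key, lazy));
        }
    }
}
```

Once a flight completes its entry is removed, so results are not cached here; the shared task only collapses simultaneous misses into one load.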

6. Key Design & Serialization

Use predictable, readable keys like product:{id}:detail:v3.

Avoid embedding JSON into keys.

Use MessagePack or protobuf for large objects.

Compress only if your payloads are big enough to justify CPU cost.
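A minimal sketch of size-gated compression using GZipStream from System.IO.Compression. The PayloadCodec name, the one-byte header format and the 1 KB threshold are assumptions for illustration; measure your own payload sizes before picking a cut-off. It works on already-serialized bytes, so it pairs with whichever serializer (JSON, MessagePack, protobuf) you choose.

```csharp
using System;
using System.IO;
using System.IO.Compression;

// Hypothetical codec: compress only payloads above a threshold.
// Byte 0 is a header: 0 = stored raw, 1 = GZip-compressed body.
public static class PayloadCodec
{
    private const int Threshold = 1024; // placeholder; tune from real measurements

    public static byte[] Encode(byte[] payload)
    {
        if (payload.Length < Threshold)
        {
            // Small payload: compression CPU is not worth it, store as-is.
            var raw = new byte[payload.Length + 1];
            raw[0] = 0;
            Buffer.BlockCopy(payload, 0, raw, 1, payload.Length);
            return raw;
        }

        using var output = new MemoryStream();
        output.WriteByte(1);
        using (var gzip = new GZipStream(output, CompressionLevel.Fastest, leaveOpen: true))
        {
            gzip.Write(payload, 0, payload.Length);
        } // disposing the GZipStream flushes the compressed data into output
        return output.ToArray();
    }

    public static byte[] Decode(byte[] stored)
    {
        if (stored[0] == 0)
            return stored.AsSpan(1).ToArray();

        using var input = new MemoryStream(stored, 1, stored.Length - 1);
        using var gzip = new GZipStream(input, CompressionMode.Decompress);
        using var result = new MemoryStream();
        gzip.CopyTo(result);
        return result.ToArray();
    }
}
```

The header byte lets readers handle both forms, so the threshold can be changed later without invalidating entries already in the cache.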

7. Security & Operational Considerations

🔐 Don’t cache sensitive/PII data unless encrypted.

🌍 Keep Redis close to your app (same region).

🧮 Tune Redis eviction policies (volatile-lru, allkeys-lru, etc.).

📊 Monitor IMemoryCache size and Redis memory usage.

🧠 Always track hit/miss ratios and latency metrics.

8. Observability & Metrics

Monitor your caching layer like a first-class system component:

Cache hit/miss rate (overall & per key group)

Average latency for Get and Set operations

Evictions and expiration events

Redis connection health and timeout rates

Export metrics to Prometheus or Application Insights
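A minimal sketch of hit/miss tracking with System.Diagnostics.Metrics (built into .NET 6 and later). InstrumentedCache, the meter name Demo.Caching and the instrument names are hypothetical; an OpenTelemetry exporter (for Prometheus or Application Insights) would collect them by meter name. If you use IMemoryCache directly, enabling MemoryCacheOptions.TrackStatistics and calling MemoryCache.GetCurrentStatistics() (.NET 7+) gives similar hit and miss totals.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Diagnostics.Metrics;
using System.Threading;

// Hypothetical wrapper that records hits and misses, tagged by key group.
public sealed class InstrumentedCache
{
    private static readonly Meter CacheMeter = new("Demo.Caching");
    private static readonly Counter<long> Hits = CacheMeter.CreateCounter<long>("cache.hits");
    private static readonly Counter<long> Misses = CacheMeter.CreateCounter<long>("cache.misses");

    private readonly ConcurrentDictionary<string, string> _store = new();
    private long _hits;
    private long _misses;

    public string GetOrAdd(string keyGroup, string key, Func<string, string> load)
    {
        // Tag by key group (e.g. "product"), not by full key, to keep cardinality low.
        var tag = new KeyValuePair<string, object?>("key_group", keyGroup);
        if (_store.TryGetValue(key, out var value))
        {
            Interlocked.Increment(ref _hits);
            Hits.Add(1, tag);
            return value;
        }
        Interlocked.Increment(ref _misses);
        Misses.Add(1, tag);
        return _store.GetOrAdd(key, load);
    }

    // Local hit ratio for logs and tests; dashboards would compute it from the counters.
    public double HitRatio
    {
        get
        {
            long hits = Interlocked.Read(ref _hits);
            long total = hits + Interlocked.Read(ref _misses);
            return total == 0 ? 0 : (double)hits / total;
        }
    }
}
```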

9. Local Development & Testability

Use in-memory cache or local Redis for testing.

Abstract caching behind an ICacheService interface for mocks.

Unit-test both cache-hit and cache-miss cases.
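A minimal sketch of that approach. The article only names an ICacheService interface; the member signatures below, the InMemoryCacheService fake and the PriceService consumer are hypothetical. The accompanying test exercises a miss, a hit, and a miss again after removal.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

// Hypothetical abstraction: application code depends on ICacheService, so tests
// can swap in this in-memory fake (or a mock) instead of Redis.
public interface ICacheService
{
    Task<T> GetOrCreateAsync<T>(string key, Func<Task<T>> factory);
    Task RemoveAsync(string key);
}

public sealed class InMemoryCacheService : ICacheService
{
    private readonly ConcurrentDictionary<string, object?> _items = new();

    public async Task<T> GetOrCreateAsync<T>(string key, Func<Task<T>> factory)
    {
        if (_items.TryGetValue(key, out var cached))
            return (T)cached!; // hit
        T value = await factory(); // miss: load and remember
        _items[key] = value;
        return value;
    }

    public Task RemoveAsync(string key)
    {
        _items.TryRemove(key, out _);
        return Task.CompletedTask;
    }
}

// Hypothetical consumer under test; DbCalls counts trips to the "database".
public sealed class PriceService
{
    private readonly ICacheService _cache;
    public int DbCalls { get; private set; }

    public PriceService(ICacheService cache) => _cache = cache;

    public Task<decimal> GetPriceAsync(int productId) =>
        _cache.GetOrCreateAsync($"price:{productId}", () =>
        {
            DbCalls++;
            return Task.FromResult(1200m);
        });
}
```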

✅ Takeaways

Caching is more than just speed — it’s about data correctness, resilience, and cost-efficiency.

✔ Start simple — use IMemoryCache for fast local reads.

✔ Add a distributed cache (Redis) for scale, optionally behind HybridCache.

✔ Implement proper invalidation and observability early.

✔ Evolve your cache strategy as your traffic and data patterns grow.

❓What Do You Think?

Caching can make or break your system’s performance - so I’m curious:

👉 Which caching mistake or pattern has caused you the most trouble (or biggest win) in your .NET projects?

Share your thoughts or war stories in the comments; I’d love to hear how you’ve handled caching in production.

👉 Full working code available at:

🔗https://sourcecode.kanaiyakatarmal.com/HybridCache

I hope you found this guide helpful and informative.

Thanks for reading!

If you enjoyed this article, feel free to share it and follow me for more practical, developer-friendly content like this.